Things that shouldn't cohere. But do.

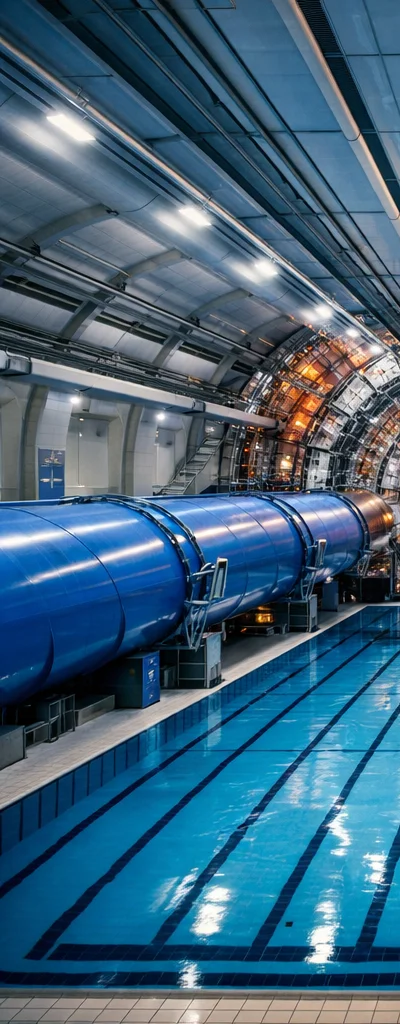

Empty Olympic pool + Particle accelerator

Geometry saw: contained energy, precise boundaries, human ambition at scale

Both are temples to measurement. Both demand absolute control of their environment. Both transform human limitations into something that transcends them.

Baroque opera house + Data center server farm

Both orchestrate complexity through rigid hierarchy

The opera house coordinates 100 musicians, 50 singers, lighting, staging. The data center coordinates 10,000 servers. Same constraint geometry. Different substrate.

Brutalist church + Neon Tokyo signage

Blade Runner exists because this coherence exists

Concrete spirituality meets electric transcendence. Cold geometry holding warm light. The sacred rendered in infrastructure. This is why cyberpunk works.

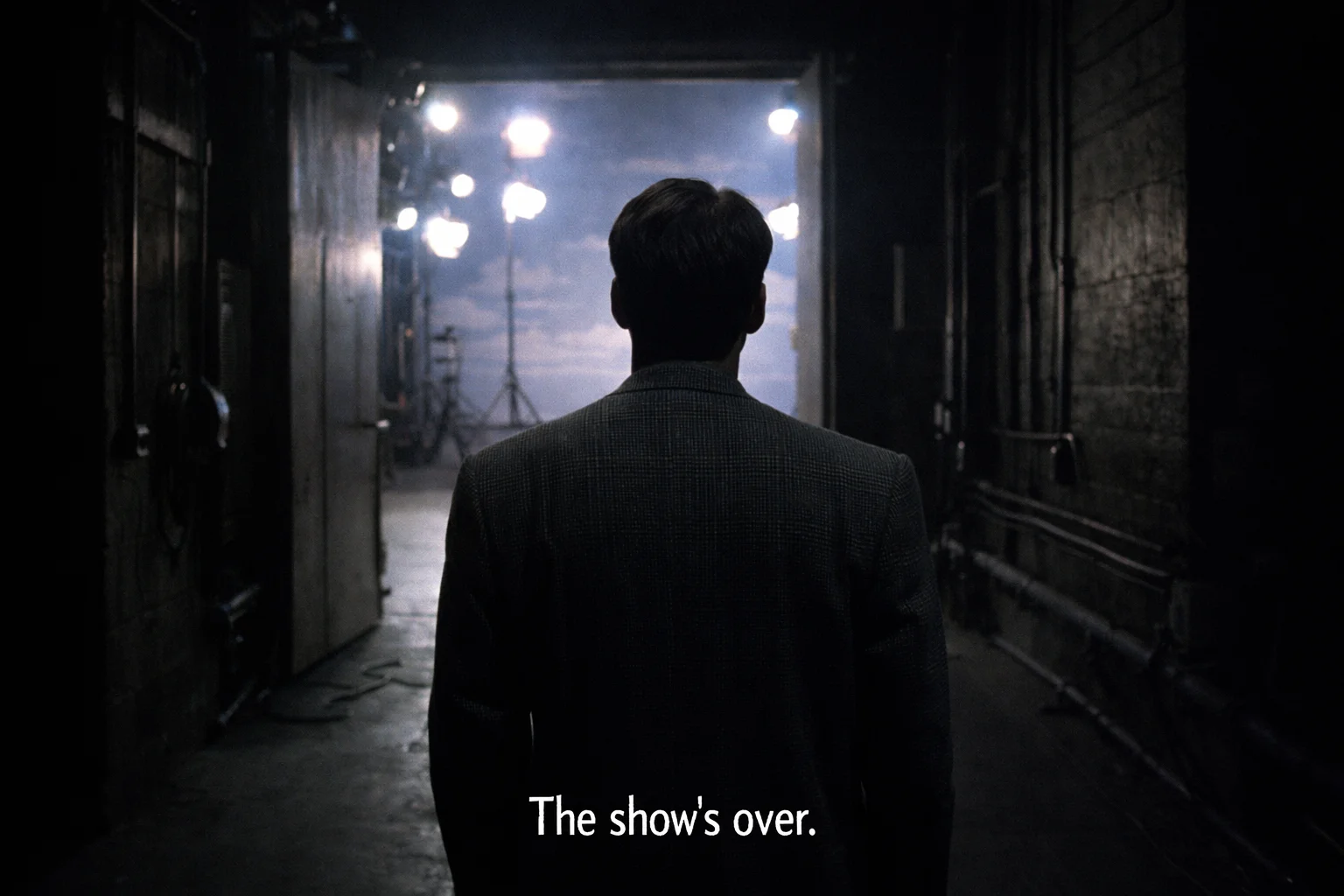

The Truman Show: what should his final line be?

ARBITER ranked "The show's over" above the iconic catchphrase

The catchphrase creates ambiguity: is Truman still performing? "The show's over" claims authorship. It states truth without explanation. It works for all three audiences. Geometry understood narrative closure better than sentiment.

Geometry finds doors in semantic space you didn't know existed.